Process optimization streamlines operations to maximize efficiency, quality, and profitability. It involves systematically analyzing and improving workflows to eliminate waste, reduce variation, and make better use of resources. Typical goals include cost reduction, quality improvement, time savings, and better resource utilization; Lean Six Sigma programs, for example, are often reported to achieve roughly 15–25% cost savings and 20–30% productivity gains. Modern process optimization increasingly relies on data and technology: machine learning and AI, for instance, can predict bottlenecks and automate routine tasks. Reported results for optimized processes include cycle-time reductions of roughly 40–60% and throughput increases of 20–35%. These gains give organizations a competitive edge and support sustainability through lower energy and material use.

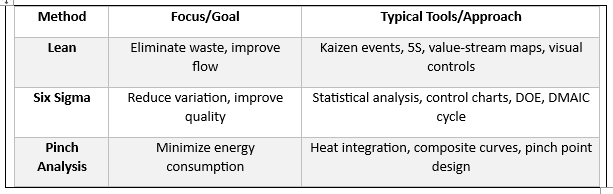

Lean (originating from Toyota’s Production System) focuses on eliminating non-value-added activities and establishing smooth flow. It uses simple, “people-powered” tools like 5S workplace organization, Kaizen (continuous improvement) events, value-stream mapping, and visual controls to identify and remove waste. Lean projects often start with quick shop-floor changes – for example, reorganizing layouts or standardizing work – to immediately reduce delays and defects. Over time, Lean cultures engage all employees in ongoing improvement.
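A value-stream map ultimately boils down to simple arithmetic. You total the value-added (processing) time and the waiting time across the steps, then look for where the waste accumulates. The sketch below uses a hypothetical four-step stream. The step names, the times, and the `Step`/`value_stream_summary` names are illustrative assumptions, not data from any real line.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    value_added_min: float  # processing time the customer actually pays for
    wait_min: float         # queue/inventory time before this step (waste)


def value_stream_summary(steps):
    """Return (lead time, value-added time, value-added ratio, step with the longest wait)."""
    value_added = sum(s.value_added_min for s in steps)
    lead_time = value_added + sum(s.wait_min for s in steps)
    worst = max(steps, key=lambda s: s.wait_min)
    return lead_time, value_added, value_added / lead_time, worst.name


# Hypothetical value stream; all times are illustrative, in minutes.
stream = [
    Step("cutting", 2.0, 30.0),
    Step("welding", 5.0, 120.0),
    Step("painting", 10.0, 240.0),
    Step("assembly", 8.0, 60.0),
]
lead, va, ratio, worst_wait = value_stream_summary(stream)
print(f"lead time {lead:.0f} min, value-added {va:.0f} min ({ratio:.1%}), "
      f"longest wait before: {worst_wait}")
```

With these made-up numbers, only 25 of 475 minutes (about 5%) add value. This is the kind of gap a Kaizen event would target first, starting with the queue in front of painting.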

Challenges: Requires sustained cultural change and employee buy-in. Improvements often depend on frontline engagement and management support. Without ongoing discipline, processes may revert to wasteful patterns.

Six Sigma is a data-driven quality methodology designed to reduce process variation and defects. It treats every process as a measurable system and applies statistical tools in a structured DMAIC (Define–Measure–Analyze–Improve–Control) cycle. Typical Six Sigma projects, led by trained Black Belts and Green Belts, use control charts, design of experiments, and failure analysis to find the root causes of defects. The end goal is to push a process toward "six sigma" performance (about 3.4 defects per million opportunities, a figure that assumes the conventional 1.5σ long-term process shift) by making outputs highly consistent.
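The "3.4 defects per million opportunities" figure comes from a short calculation. You convert observed defects to DPMO, take the standard normal quantile of the long-term yield, and add the conventional 1.5σ shift. Here is a minimal sketch using Python's standard `statistics.NormalDist`. The inspection counts are hypothetical, and the function names are illustrative.

```python
from statistics import NormalDist


def dpmo(defects: int, units: int, opportunities_per_unit: int) -> float:
    """Defects per million opportunities."""
    return defects / (units * opportunities_per_unit) * 1_000_000


def sigma_level(dpmo_value: float, shift: float = 1.5) -> float:
    """Short-term sigma level, assuming the conventional 1.5-sigma long-term shift."""
    long_term_yield = 1.0 - dpmo_value / 1_000_000
    return NormalDist().inv_cdf(long_term_yield) + shift


# Hypothetical inspection data: 17 defects in 1,000 units, 5 defect opportunities each.
d = dpmo(17, 1000, 5)
print(f"DPMO = {d:.0f}, sigma level = {sigma_level(d):.2f}")
print(f"3.4 DPMO -> sigma level {sigma_level(3.4):.2f}")
```

Note that the 1.5σ shift is a convention, not a law. Some practitioners report the unshifted (long-term) sigma level instead, so state which one you use.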

Challenges: Implementation can be time- and data-intensive. Six Sigma requires significant statistical training and disciplined project management. Early projects may need months of data collection and analysis, and ROI can lag initial investment in training and tools.

Many organizations combine Lean and Six Sigma (often called Lean Six Sigma), leveraging both waste reduction and variation control. Lean-driven Kaizen events can quickly eliminate obvious waste, while Six Sigma tools tackle deeper statistical issues. In practice, Lean streamlining is often followed by Six Sigma analysis for remaining problems. This synergy can yield greater overall improvement: one review notes Lean and Six Sigma together “provide the best possible quality, cost, and delivery” by using complementary tools.

Pinch analysis is a graphical method for minimizing energy use in the process industries. It combines all hot process streams (which need cooling) and cold process streams (which need heating) into temperature-enthalpy composite curves. It then identifies the "pinch point" where the curves come closest, separated by the chosen minimum approach temperature (ΔTmin). The pinch is the thermodynamic bottleneck for heat recovery: by matching hot and cold streams correctly on each side of it, the plant can meet its heating and cooling needs with the least external energy. In essence, pinch analysis computes the minimum hot and cold utility requirements (energy targets) before any equipment is designed, and then guides the design of heat exchanger networks and utility systems to achieve them.
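The energy targets can also be computed numerically with the problem-table (heat cascade) method, which is the algebraic counterpart of the composite curves. The sketch below does the following:

- Shifts hot streams down and cold streams up by ΔTmin/2.
- Cascades each temperature interval's heat surplus or deficit downward.
- Adds just enough hot utility to keep every heat flow non-negative.

The four streams and their heat-capacity flow rates are an illustrative example, and `problem_table` is a hypothetical helper, not a library API.

```python
def problem_table(streams, dt_min):
    """Problem-table cascade: minimum hot/cold utility and shifted pinch temperature(s).

    streams: list of (supply_temp, target_temp, cp), cp = heat-capacity flow rate
    (e.g. kW/K). A stream is hot if it cools down (supply > target).
    """
    shifted = []
    for ts, tt, cp in streams:
        hot = ts > tt
        # Shift hot streams down and cold streams up by dt_min/2, so that any match
        # in the shifted scale keeps at least dt_min between real temperatures.
        delta = -dt_min / 2 if hot else dt_min / 2
        shifted.append((max(ts, tt) + delta, min(ts, tt) + delta, cp, hot))

    bounds = sorted({t for s in shifted for t in s[:2]}, reverse=True)
    cascade = [0.0]  # cumulative heat surplus flowing down past each boundary
    for upper, lower in zip(bounds, bounds[1:]):
        net_cp = sum(cp if hot else -cp
                     for top, bottom, cp, hot in shifted
                     if top >= upper and bottom <= lower)  # stream spans interval
        cascade.append(cascade[-1] + net_cp * (upper - lower))

    q_hot_min = max(0.0, -min(cascade))       # heat needed so no flow is negative
    flows = [q_hot_min + c for c in cascade]  # feasible cascade
    q_cold_min = flows[-1]
    pinch = [t for t, f in zip(bounds, flows) if abs(f) < 1e-9]
    return q_hot_min, q_cold_min, pinch


streams = [
    (20.0, 135.0, 2.0),   # cold
    (170.0, 60.0, 3.0),   # hot
    (80.0, 140.0, 4.0),   # cold
    (150.0, 30.0, 1.5),   # hot
]
q_h, q_c, pinch = problem_table(streams, dt_min=10.0)
print(q_h, q_c, pinch)
```

For these illustrative numbers (ΔTmin = 10 °C, cp in kW/K), the targets are 20 kW of hot utility and 60 kW of cold utility. The pinch sits at a shifted temperature of 85 °C, which corresponds to 90 °C on the hot side and 80 °C on the cold side. No heat should be transferred across that point.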

Challenges: Pinch analysis requires detailed thermodynamic process data and expert analysis. It is most effective for thermal processes; non-thermal losses (such as poor chemical reaction yield) are outside its scope. Skilled engineers are needed to interpret pinch results and redesign utility systems. Retrofit studies for existing plants can also be complex, so they are usually led by experienced specialists.

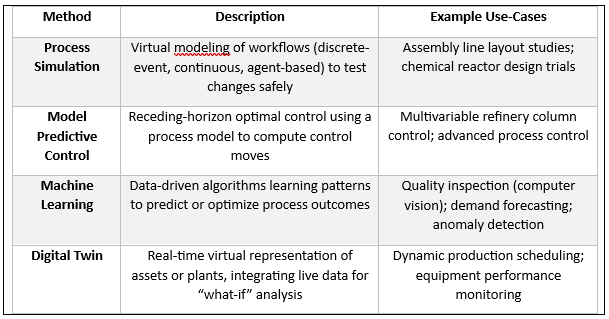

Modern process simulation uses computer models to create virtual replicas of production systems. By encoding equipment specifications, material flows, and operational rules, simulation software can mimic discrete production lines or continuous processes. Engineers run “what-if” scenarios on the model to test layout changes, scheduling rules, maintenance plans, etc., without disrupting actual operations. Different approaches include discrete-event simulation (tracking individual jobs through a factory), agent-based models (simulating interactions of many components), and continuous or dynamic simulation (common in chemical processes). Advanced platforms even incorporate real-time data and ML for live updates.
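To make the discrete-event idea concrete, here is a minimal sketch of a single FIFO machine. An event list (a min-heap ordered by time) drives the simulation, and the model is run for two "what-if" service times. The arrival times, service times, and the `simulate_single_machine` name are hypothetical. Real simulation packages add randomness, multiple resources, and statistics collection on top of this core loop.

```python
import heapq
from collections import deque


def simulate_single_machine(arrivals, service_time):
    """Discrete-event simulation of one FIFO machine; returns per-job waiting times."""
    # Each event is (time, sequence number, kind, job id); the sequence number
    # breaks ties so events at the same time are handled in insertion order.
    events = [(t, i, "arrive", i) for i, t in enumerate(arrivals)]
    heapq.heapify(events)
    seq = len(events)
    queue = deque()
    busy = False
    start = {}
    while events:
        now, _, kind, job = heapq.heappop(events)
        if kind == "arrive":
            queue.append(job)
        else:  # "depart": the machine becomes free
            busy = False
        if not busy and queue:
            nxt = queue.popleft()
            start[nxt] = now
            busy = True
            heapq.heappush(events, (now + service_time, seq, "depart", nxt))
            seq += 1
    return [start[j] - arrivals[j] for j in range(len(arrivals))]


arrivals = [0.0, 1.0, 2.0, 3.0]
for service in (1.5, 1.0):  # what-if: current machine vs. a hypothetical faster one
    waits = simulate_single_machine(arrivals, service)
    print(service, sum(waits) / len(waits))
```

In this toy case, cutting the service time from 1.5 to 1.0 time units removes all queueing (the mean wait drops from 0.75 to 0). That is the kind of answer a what-if run provides without touching the real line.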

Challenges: Creating accurate simulation models requires detailed data and expertise. Inaccurate assumptions or missing data can lead to misleading results. Building and maintaining a high-fidelity model can be time-consuming, and simulations may oversimplify real-world variability. Additionally, licensing and running large-scale simulations can involve significant IT and software costs.

Model Predictive Control (MPC) is an advanced digital control method for real-time process optimization. MPC uses a mathematical model of the process (often linearized around the operating point) to predict future outputs. At each control step, it solves a constrained optimization problem over a finite prediction horizon. The solution is the sequence of control moves that best tracks a desired trajectory while respecting input and output limits. Only the first move is applied; at the next time step the horizon shifts forward and the problem is solved again using fresh measurements. This receding-horizon strategy lets MPC anticipate predicted changes and measured disturbances, and handle multiple inputs and outputs simultaneously. It is widely used in the process industries (especially chemical plants and refineries) to control distillation columns, reactors, and other complex systems.
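The receding-horizon loop can be sketched for a single-input, single-output case. The sketch assumes a hypothetical first-order model `x[k+1] = a*x[k] + b*u[k]`, a quadratic tracking cost, and a hard actuator limit `|u| <= u_max`. At each step it solves the box-constrained problem with projected gradient descent, applies only the first move, and warm-starts the next solve with the shifted plan. Industrial MPC uses multivariable models and dedicated QP solvers. The model, weights, and function names here are illustrative assumptions.

```python
def predict(x0, u, a, b):
    """Predicted states x_1..x_N of the model x[k+1] = a*x[k] + b*u[k]."""
    xs, x = [], x0
    for uk in u:
        x = a * x + b * uk
        xs.append(x)
    return xs


def gradient(x0, u, a, b, q, rho, ref):
    """Gradient of J = sum q*(x_k - ref)^2 + sum rho*u_k^2 w.r.t. the input sequence."""
    xs = predict(x0, u, a, b)
    g = [0.0] * len(u)
    s = 0.0
    for j in reversed(range(len(u))):  # backward (adjoint) pass, O(N)
        s = 2.0 * q * (xs[j] - ref) + a * s
        g[j] = b * s + 2.0 * rho * u[j]
    return g


def solve_mpc(x0, u_init, a, b, q, rho, ref, u_max, iters=500):
    """Box-constrained finite-horizon problem, solved by projected gradient descent."""
    n = len(u_init)
    # An upper bound on the gradient's Lipschitz constant gives a safe step size.
    frob2 = sum((b * a ** (i - j)) ** 2 for i in range(n) for j in range(i + 1))
    step = 1.0 / (2.0 * (q * frob2 + rho))
    u = list(u_init)
    for _ in range(iters):
        g = gradient(x0, u, a, b, q, rho, ref)
        # Gradient step, then project back onto the actuator limits.
        u = [min(u_max, max(-u_max, uk - step * gk)) for uk, gk in zip(u, g)]
    return u


def run_closed_loop(steps=30, horizon=10):
    a, b = 0.9, 0.5          # hypothetical first-order process model
    q, rho = 1.0, 0.01       # tracking vs. input-effort weights
    ref, u_max = 1.0, 0.5    # setpoint and actuator limit
    x, plan = 0.0, [0.0] * horizon
    xs, us = [x], []
    for _ in range(steps):
        plan = solve_mpc(x, plan, a, b, q, rho, ref, u_max)
        u0 = plan[0]                 # apply only the first move
        x = a * x + b * u0           # plant assumed identical to the model
        xs.append(x)
        us.append(u0)
        plan = plan[1:] + plan[-1:]  # shift the plan to warm-start the next solve
    return xs, us


xs, us = run_closed_loop()
print(f"final output {xs[-1]:.3f}, largest |u| {max(abs(u) for u in us):.2f}")
```

The early moves hit the actuator limit, and the controller then settles the output near the setpoint. In practice, a mismatch between the model and the plant (ignored here) is what makes model accuracy and tuning the hard parts.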

Challenges: MPC requires a reliable process model and significant computation. Solving an optimization problem at each control interval demands processing power and robust software. Poor model accuracy can degrade performance. Tuning MPC controllers (choosing horizons, weights, etc.) is also complex and may require iterative refinement. In safety-critical environments, ensuring MPC robustness under faults is an additional concern.

Machine Learning applies data-driven algorithms to recognize patterns and make predictions that optimize processes. In industrial systems, ML models (such as neural networks, decision trees, and reinforcement learning agents) can learn from historical sensor data to predict equipment failures, detect defects, forecast demand, or optimize process settings. For example, ML-based computer vision inspects parts for defects, and time-series models forecast energy demand or raw material usage.
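As a small illustration of failure prediction from sensor data, the sketch below trains a logistic-regression classifier with plain gradient descent on synthetic vibration and temperature readings. The data and the labeling rule are invented for the example. Real deployments would use historical plant data, a held-out validation set, and usually a library such as scikit-learn rather than hand-written training code.

```python
import math
import random


def make_data(n=200, seed=0):
    """Synthetic sensor readings with a hypothetical failure rule (not real plant data)."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        vibration = rng.uniform(0.0, 10.0)     # mm/s
        temperature = rng.uniform(40.0, 90.0)  # deg C
        X.append((vibration, temperature))
        y.append(1 if vibration + 0.1 * temperature > 11.0 else 0)
    return X, y


def standardize(X):
    """Scale each feature to zero mean and unit variance."""
    cols = list(zip(*X))
    means = [sum(c) / len(c) for c in cols]
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) for c, m in zip(cols, means)]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X, y, lr=0.5, epochs=500):
    """Batch gradient descent on the average log-loss."""
    w, bias, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + bias) - yi
            gw = [g + err * xj for g, xj in zip(gw, xi)]
            gb += err
        w = [wj - lr * g / n for wj, g in zip(w, gw)]
        bias -= lr * gb / n
    return w, bias


X_raw, y = make_data()
X = standardize(X_raw)
w, bias = train_logistic(X, y)
preds = [1 if sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + bias) >= 0.5 else 0
         for xi in X]
accuracy = sum(p == t for p, t in zip(preds, y)) / len(y)
print(f"training accuracy: {accuracy:.2f}")
```

This reports training accuracy only. Because the synthetic labels follow a clean linear rule, the score is optimistic compared with noisy, imbalanced industrial data.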

Challenges: ML requires high-quality data and careful model design. It can overfit or produce spurious correlations if data are insufficient or biased. Industrial data streams often need cleaning and integration. Domain expertise is needed to select features and interpret results. Moreover, ML solutions can be hard to certify in critical processes due to lack of interpretability and guarantees (unlike classical controls). Organizations must also address data privacy and ensure cross-team collaboration (operations, IT, data scientists).

A Digital Twin is a high-fidelity virtual model of a physical asset, process, or system, continuously updated with real-time data. Unlike a static simulation, a digital twin is linked via IoT sensors and controls to its real counterpart, so it reflects current operating conditions. Twins can exist at different scopes: individual products (e.g. as-built CAD models), equipment assets (fed by PLC/IoT data), or entire factories and supply chains. Once deployed, a digital twin supports advanced "what-if" scenario analysis, predictive maintenance, and optimization. Asset twins, for example, forecast failures and help optimize yield or energy use. Factory-scale twins support dynamic scheduling and layout planning by simulating changes to the production line before they are made physically.
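The core mechanics of a twin are keeping a model synchronized with live measurements and running what-if forecasts from that synchronized state. Both can be shown in a few lines. The sketch assumes a hypothetical first-order heating model and a simple fixed-gain correction toward each sensor reading, a basic form of state estimation. A "physical" process with a deliberately different heat-loss coefficient stands in for the real asset. `ThermalTwin` and all parameter values are illustrative and do not represent any product's API.

```python
class ThermalTwin:
    """Minimal digital-twin sketch: a first-order heating model kept in sync with
    live temperature measurements, plus a what-if forecast.

    Assumed model: T[k+1] = T[k] + gain*power - loss*(T[k] - ambient).
    """

    def __init__(self, temp, gain=0.05, loss=0.08, ambient=20.0, correction=0.8):
        self.temp = temp
        self.gain, self.loss, self.ambient = gain, loss, ambient
        self.correction = correction  # how strongly measurements override the model

    def _step(self, temp, power):
        return temp + self.gain * power - self.loss * (temp - self.ambient)

    def sync(self, power, measured_temp):
        """Predict one step with the applied power, then correct toward the sensor."""
        predicted = self._step(self.temp, power)
        self.temp = predicted + self.correction * (measured_temp - predicted)
        return self.temp

    def what_if(self, power, steps):
        """Forecast the temperature trajectory under a hypothetical heater setting."""
        temp, trajectory = self.temp, []
        for _ in range(steps):
            temp = self._step(temp, power)
            trajectory.append(temp)
        return trajectory


# Stand-in "physical" process: its heat-loss coefficient (0.10) differs from the twin's (0.08).
true_temp = 20.0
twin = ThermalTwin(temp=20.0)
for _ in range(50):
    true_temp = true_temp + 0.05 * 100.0 - 0.10 * (true_temp - 20.0)
    twin.sync(100.0, true_temp)

print(f"plant {true_temp:.2f}, twin {twin.temp:.2f}")
print(f"what-if 150: {twin.what_if(150.0, 20)[-1]:.1f}, 50: {twin.what_if(50.0, 20)[-1]:.1f}")
```

The measurement correction keeps the twin's state close to the plant despite the model error. Its forecasts, however, still inherit that error, which is why model fidelity appears among the challenges.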

Challenges: Building a digital twin is complex. It requires integrating many data sources (PLCs, ERP, MES, etc.) into one coherent model and ensuring data quality. The initial effort and cost can be high, involving sensors, network infrastructure, and software. Twins also pose cybersecurity concerns (exposing detailed process data) and require governance of a “single source of truth.” Finally, a twin’s accuracy depends on its underlying models; simplifying assumptions may limit how closely it matches reality. Thus, many implementations start modestly (e.g. a single production line) and scale up.

Chemical & Process Industries: Pinch analysis and steady-state simulation have long optimized refinery and petrochemical plants’ heat integration (e.g. pinch targets in crackers and reformers). Model Predictive Control is now standard in chemical plants (distillation, polymerization) to maintain optimal product yield. Plant digital twins (sometimes called “smart plant” models) are emerging to monitor equipment health and process bottlenecks in real time.

Energy & Utilities: In power generation and oil & gas, optimization spans both control and analytics. Refinery control rooms use MPC and other advanced control to maximize throughput under safety constraints. Smart grid operators and plants employ ML and digital twins for load forecasting, real-time balancing, and maintenance. Notably, General Electric's use of digital twins for gas turbines and power plants has yielded significant gains: by combining digital twin models with ML-driven analytics, GE reduced unplanned downtime by ~40% and cut maintenance costs by ~20%.

Six Sigma: Benefits – Data-driven defect reduction and process capability improvement; Challenges – Heavy reliance on statistics and training, projects can be slow to implement without good data.

Pinch Analysis: Benefits – Systematic identification of energy-saving opportunities; can significantly cut utility costs; Challenges – Specialized to thermal networks; requires detailed thermodynamic data and expertise.

Process Simulation: Benefits – Enables virtual testing of changes with no production risk; often shortens project timelines and improves resource planning; Challenges – Building accurate models is resource-intensive and requires validation against real behavior.

MPC: Benefits – Optimizes multivariable processes under constraints and delays, often improving stability and efficiency; Challenges – High computational demand and dependency on model accuracy.

Machine Learning: Benefits – Finds complex patterns (e.g. optimal process settings) and enables adaptive control; boosts predictive maintenance, quality, and scheduling; Challenges – Needs large, clean data sets and integration into workflows; model transparency and trust can be issues.

Digital Twins: Benefits – Holistic, real-time optimization and “what-if” analysis across assets; can significantly reduce costs (e.g. ~5%–10% in case studies) and downtime; Challenges – Complex data integration and initial investment; requires robust data management and cybersecurity.

Rohm & Haas (Pinch): A Texas chemical plant used pinch analysis to identify projects saving 2.2 MMBtu and $7.7 million annually in energy costs.

BMW (ML – Quality): BMW’s deployment of ML-based visual inspection reduced part defects by ~40%, greatly cutting rework.

ArcelorMittal (ML – Maintenance): ML models analyzing sensor data in an ArcelorMittal steel plant predicted equipment failures, cutting unplanned downtime by 20% and maintenance costs by 15%.

GE Power Plants (Digital Twin + ML): GE combined digital twins and ML in gas turbines and power plants, reducing unplanned downtime by 40% and boosting efficiency ~10%.

Industrial Factory (Digital Twin): A McKinsey-reported case used a factory-scale digital twin to optimize production scheduling, reducing overtime costs by 5–7%.

These examples illustrate how diverse techniques—both long-established and cutting-edge—drive optimization in real operations. By choosing the right mix of tools (often combining methods) for a given industry, organizations can achieve significant efficiency gains, cost savings, and performance improvements.